eNews, Technology

The rising threat of deepfake fraud

Artificial intelligence is gradually becoming a mainstay for many credit managers, with automated credit processes powered by AI supercharging the work of many credit departments. However, with this new technology comes new anxieties around fraud. Just as easily as AI can assist your work as a credit manager, fraudsters can use the same technology to target your business.

Why it matters: With deepfake technology, anyone can use AI to generate a realistic image, video or audio recording. Whether it is a spoofed phone call that sounds convincingly real or a website full of AI-generated images used to give the impression of a legitimate business, fraudsters have taken advantage of deepfake technology, leading credit departments everywhere to shore up their defenses against the growing threat.

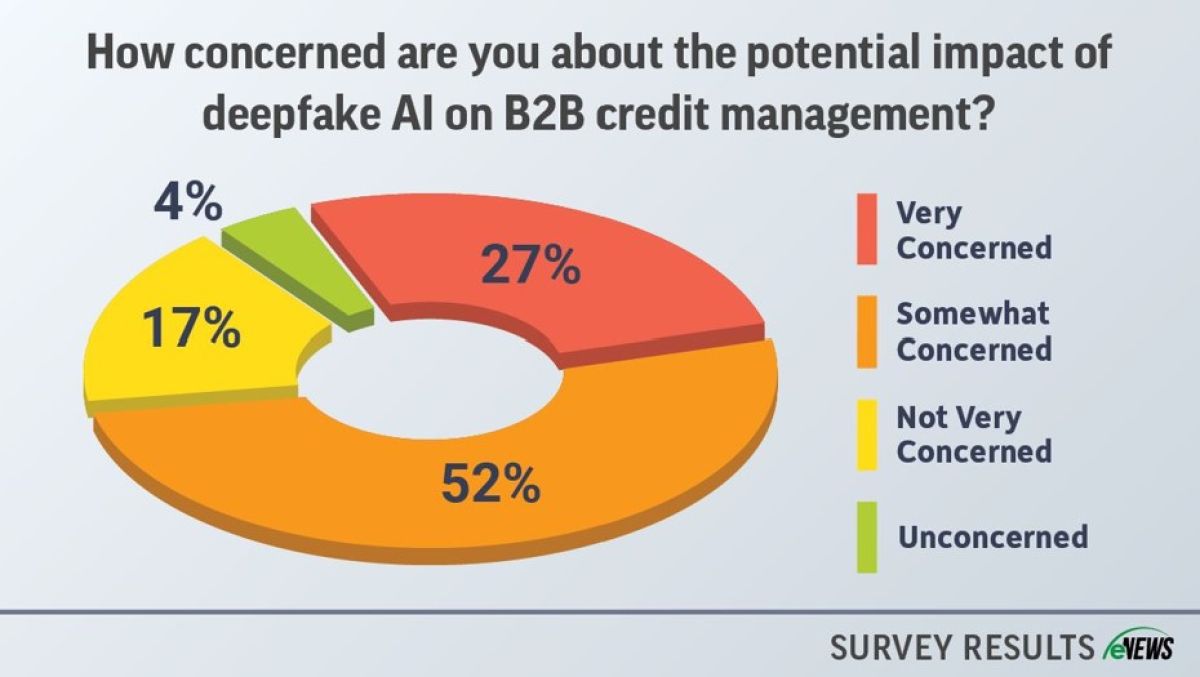

By the numbers: According to an eNews poll, 27% of credit managers are very concerned about the potential impact of deepfake AI on B2B credit management. An additional 52% report that they are somewhat concerned, with only 21% reporting that they are either not very concerned or unconcerned. Although a clear majority of credit managers agree that deepfake technology poses a threat, there is not yet a consensus on how to protect a company from deepfake fraud.

As credit managers assess the threat of deepfake fraud, it is important that they grasp the full scope of the technology’s power. Deepfake technology can undermine the credit approval process because of how easily fraudsters can create forged images and videos.

“When deciding on the credit limits to extend to customers we rely on the information from customers, for example, which might be in the form of a credit application, bank details, authorized signatory details or information from their website,” said Abdul Raheem Peedika Kandy, CICP, credit manager at Manlift Middle East LLC (Dubai, UAE). “We are looking at technology that can mimic all this information.”

While many credit managers may understand how deepfake technology can be used to commit fraud, it can still be very difficult to recognize it. “We need to accept that every aspect we consider in credit can be replicated or forged,” Peedika Kandy said. “So, a fraud can be generated by a system, and as credit managers, it is our responsibility to be diligent enough to be able to identify and prevent it.”

Deepfakes can even undermine over-the-phone contact, with AI mimicking the voice of your customer from a spoofed number.

“I know a case where fraudsters mimicked the voice of the credit personnel and left voice messages for their customers to get them to try to change their remittance,” said Jon Hanson, CCE, CCRA, vice president, director of corporate credit at OVOL USA (Carrollton, TX). “And they did it after hours so that they got the voicemail, and it sounded just like that person.”

Credit departments must carefully consider how to prepare credit managers to spot deepfakes. While some may be able to recognize the uncanny sheen of computer-generated images, many might not realize this is something to look out for until it is too late. Even those who are less tech-savvy can have protocols in place that prevent major losses to fraudsters.

“We’ve been talking about deepfake AI in our accounting department because we had trouble with phishing in the last few years, as in people pretending to be customers over the phone and changing routing information,” said Tricia Crisman, CBA, accounting associate at Steelscape (Kalama, WA). “We talked about making sure that you’re not responding directly to an unknown email and that you’re going through your known contacts. We’ve adopted preventative measures and just really stop and think instead of hitting that panic button, which is where we’ve seen mistakes made in the past.”

While you might not automatically recognize that the voice on the other end of a phone call is a deepfake, you can always alert your team when a seemingly known customer is asking to change an address for a delivery or a routing number for payment.

As more is learned about deepfake technology, credit departments will likely develop more advanced protocols, but exercising extra caution in remote interactions can help catch fraudsters early on.